This is the second in our three-part blog series, describing the tech side of how ustwo went about helping The Body Coach to expand and develop their business to become digital-first with an award-winning app. Find the other parts here: Part 1, Part 3

First sprint

At ustwo, we get tangible from the very first Sprint. Rather than spending weeks or months on technical build-up or foundation laying, we want to get our work into stakeholders’ hands (and ideally users’ hands!) as quickly as possible.

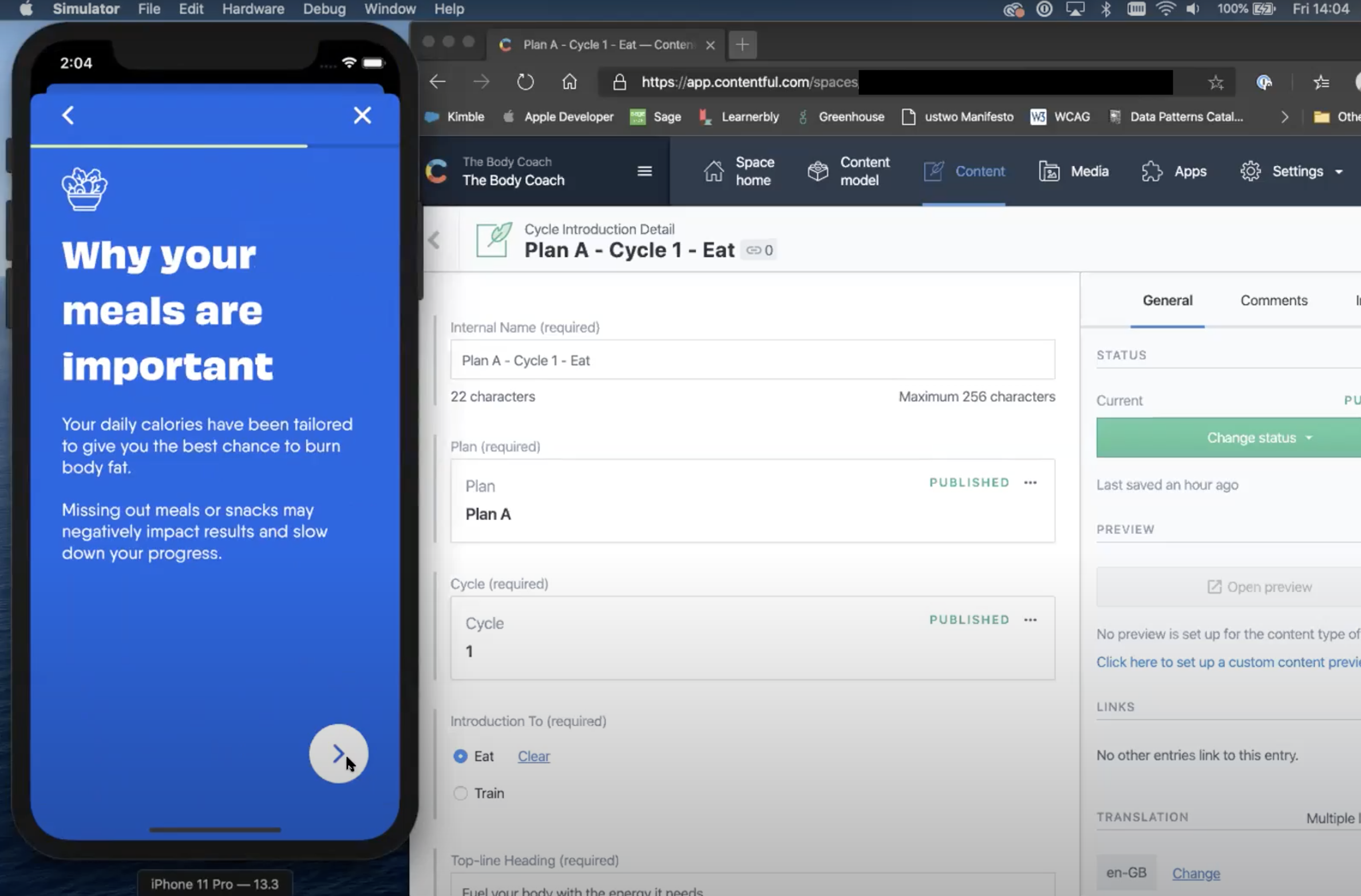

We picked a user story that was end-to-end but also quite “simple” in terms of the amount of business logic. We made it clear to everyone that since this was the first story to be built, it would need revisions later. In our case, the best candidate was the Intro to Eat feature, where users could learn more about the food component of the plan, how the recipes are created, and recommended ways to think about healthy eating. Essentially, the iOS app would query our backend for the content relevant to that user; the backend would, in turn, query our third-party CMS, Contentful, and return the resulting content for display. From an experience standpoint, this involved a basic content set (a series of titled pages in an ordered sequence), pagination at the frontend (though all content would be returned in one go from the backend), and entry and exit calls to action.
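As a rough illustration of that flow, the sketch below shows how a backend function might turn CMS entries into a single ordered content set that the app then pages through locally. It is not the project's actual Lambda code: the function name and the `order`, `title` and `body` field names are hypothetical, and the CMS call itself is left out.

```python
from dataclasses import dataclass, asdict


@dataclass
class Page:
    title: str
    body: str


def build_content_set(entries: list[dict]) -> dict:
    """Turn unordered CMS-style entries into one ordered content set.

    `entries` stands in for whatever the CMS returns. The keys used here
    ("order", "title", "body") are assumptions made for illustration.
    """
    ordered = sorted(entries, key=lambda entry: entry["order"])
    pages = [Page(title=e["title"], body=e["body"]) for e in ordered]
    # Everything goes back in one response; the client paginates locally.
    return {"pages": [asdict(p) for p in pages], "pageCount": len(pages)}


if __name__ == "__main__":
    sample = [
        {"order": 2, "title": "How recipes are created", "body": "Placeholder copy"},
        {"order": 1, "title": "Intro to Eat", "body": "Placeholder copy"},
    ]
    print(build_content_set(sample))
```

Returning the whole set at once keeps the backend contract simple for a first story, at the cost of a larger payload if the content set grows.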

To do this, we needed to create the initial iOS app in Xcode, set up our AWS AppSync API backed by Lambdas, and define our first models in Contentful. All of our backend infrastructure was managed by Terraform from the outset.

To ensure we could actually get this out in a single Sprint, we limited the amount we would automate and built up our tooling as we went (rather than frontloading it). While we managed all our infrastructure as code, we didn’t set up the Continuous Deployment (CD) pipeline from the start. Instead, we ran `terraform apply` locally and added the CD pipeline a Sprint or two later.

We made similar choices on the iOS side. Rather than setting up a multi-framework architecture with a detailed design system on day one, we picked a fairly simple internal architecture and gave ourselves the permission to evolve it as we built things out. That way, we’d have a clearer idea of what was actually needed rather than what might be needed.

Alongside this, we needed a robust mechanism for distributing these early builds of the iOS application. We knew we’d also be running a beta program later, once we had a core set of features built. Thus we also got the basics set up in Apple’s App Store Connect and distributed our first TestFlight build to stakeholders by the end of the Sprint. As with Terraform, we did this quite manually in the first Sprint and slowly increased the amount of automation as we went.

In addition to building up an enormous amount of trust with our stakeholders by making it tangible from day one, we were also able to demonstrate the complexities involved in software development clearly, so they could appreciate the effort involved. Instead of regularly being asked “How hard could this be?”, we received questions from our stakeholders such as “How could we simplify this to speed up development?” or “What is an alternative approach that could achieve this same outcome?” Questions like these are far more effective at getting the best out of a product team and building a more productive relationship with it. Our Delivery Director Collin has written an excellent free book on this and many related topics, titled Make Learn Change.

Doing an end-to-end slice from the first Sprint unlocked another critical aspect for us. It allowed us to estimate future work much more accurately, predict challenges, and anticipate technical overlap between features. This was as important for project planning and roadmapping as it was for guiding the way we iterated and evolved our architecture.

Identity Management and Authentication

One of the interesting challenges we tackled early on was a classic product development foundation: the authentication flow. At ustwo, we often try to build out many other core user journeys before bringing this in. While you often can’t release an application without it, from a user’s perspective it is often the least important journey. However, The Body Coach relies on plans tailored to the individual; without authentication, there’s no experience. Hence we prioritised it as one of our first whole journeys to be built.

Our team had experience designing and building passwordless flows, where a user enters their email address to Sign Up or Log In and then receives a message at that address containing a link or a code, which they use to prove that they own the address. This project was going to follow this passwordless trend. Projects By If have an excellent overview of some of the user experience benefits of a magic link authentication system. This approach also gave us verified email accounts at the point of account creation, rather than leaving some accounts in a transitory unverified state.

Our intentions at this stage were to let AWS services integrate in their intended way, and hopefully benefit from a security and developer experience point of view. AWS Cognito integrates directly with our AppSync GraphQL API, so once users have a valid authentication token issued by Cognito, they can access all manner of Queries and Mutations to fetch or edit their data, respectively.

AWS has written an excellent post on Implementing passwordless email authentication with Amazon Cognito, if you’re interested in a bit more technical detail around the implementation.
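For a flavour of what such a flow involves, here is a minimal sketch of two of the Lambda triggers that Cognito's custom authentication flow calls: one that decides the next step, and one that checks the user's answer. It follows the general shape described in the AWS post rather than our actual code. The `secretLoginCode` key and the `MAX_ATTEMPTS` limit are assumptions, and generating and emailing the code (the Create Auth Challenge trigger) is left out.

```python
import hmac

MAX_ATTEMPTS = 3  # assumed limit on wrong answers, for illustration only


def define_auth_challenge(event: dict, context: object = None) -> dict:
    """Decide whether to issue tokens, fail, or present another challenge."""
    session = event["request"].get("session") or []
    response = event["response"]
    last = session[-1] if session else None

    if last and last.get("challengeName") == "CUSTOM_CHALLENGE" \
            and last.get("challengeResult") is True:
        # The user answered the emailed challenge correctly.
        response["issueTokens"] = True
        response["failAuthentication"] = False
    elif len(session) >= MAX_ATTEMPTS:
        # Too many wrong answers: stop the flow.
        response["issueTokens"] = False
        response["failAuthentication"] = True
    else:
        # First attempt, or a wrong answer with attempts remaining.
        response["issueTokens"] = False
        response["failAuthentication"] = False
        response["challengeName"] = "CUSTOM_CHALLENGE"
    return event


def verify_auth_challenge(event: dict, context: object = None) -> dict:
    """Compare the submitted answer with the secret stored for this challenge."""
    # "secretLoginCode" is an assumed key set by the (omitted) create trigger.
    expected = event["request"]["privateChallengeParameters"]["secretLoginCode"]
    answer = str(event["request"].get("challengeAnswer", ""))
    # Constant-time comparison avoids leaking how much of the code matched.
    event["response"]["answerCorrect"] = hmac.compare_digest(
        expected.encode(), answer.encode()
    )
    return event
```

Once Cognito issues tokens at the end of this exchange, the client can use them to call the AppSync API as described above.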

Production deployment strategy

In those early months fleshing out the architecture, we’d set up a system using Terraform Workspaces that allowed us to create whole environments easily. The system tightly coupled git branches with environments, and GitHub Actions ran Terraform for us.

With all of that in place, creating a complete copy of our system (for development or testing a feature without disrupting shared environments) was as easy as creating a new branch, pushing to GitHub, and waiting a moment for the infrastructure to be created. Pushing new code on a branch would update that branch's corresponding environment only.
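One detail a setup like this needs is a predictable mapping from branch names to environment names. The sketch below shows one hypothetical way to derive such a name; the function name, the sanitising rules and the length limit are all assumptions for illustration, not a description of our pipeline.

```python
import re

MAX_LENGTH = 32  # assumed cap, to keep derived resource names short


def environment_for_branch(branch: str) -> str:
    """Derive a lowercase, hyphen-separated environment name from a git branch."""
    name = branch.strip().lower()
    # Collapse anything that is not a letter or digit into a single hyphen.
    name = re.sub(r"[^a-z0-9]+", "-", name).strip("-")
    if not name:
        raise ValueError(f"cannot derive an environment name from {branch!r}")
    return name[:MAX_LENGTH].rstrip("-")


if __name__ == "__main__":
    print(environment_for_branch("feature/Intro-To-Eat"))
```

A CI job could then pass the result to `terraform workspace select` (or `terraform workspace new` for a first push) before applying.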

This workflow was hugely valuable for developers (confidence in our changes, isolating separate parts of the system), and for the business (disaster recovery mechanisms being regularly practiced).

The model was quite simple, neat, maybe even naive. One aspect proved challenging though, and resulted in some interesting complexity a few months in: adding the concept of Blue-Green deployments to the mix. This is a way of shifting traffic between two environments (named after the corresponding colours) to get close to zero downtime when bringing new code to production.

In a Lambda-Serverless context this wasn’t such a well-trodden path. There are some blog posts and interesting ideas, but most did not cleanly fit our composition of AWS-managed services and our repeatable, flexible environments. For example, AWS recommends using API Gateway for shifting traffic between environments, but we’re not using API Gateway. Different environments don’t really share infrastructure.

This was where we had to make a compromise for the production environment. We decided that the compute and API layers (Lambda, AppSync, and most of the other resources that support them) should be separated into “prod-blue” and “prod-green”. State, such as that held in our RDS Aurora cluster and our Cognito User Pool, would need to be shared between the blue and green environments.

To implement this, we had to make a significant crease in our simple approach for deployment. We set up a separate Terraform project/repository to manage these shared “central” resources for production, and added a variable that dictates which environment is “primary”. We then had to introduce conditional logic to our original repository to special-case the shared resources in those environments and fetch references from the “central infrastructure” repository. As it turned out, there were other benefits to this as well. A number of AWS systems are configured at the AWS Account or AWS Organisation level. Having a central repository for managing the Terraform code for these top-level configurations was an added boon.
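The idea of a single “primary” variable can be pictured with a small sketch. The logic below is hypothetical: the environment names come from the text above, but the function names and the release plan structure are assumptions, and how we actually flipped the variable is not shown here.

```python
ENVIRONMENTS = ("prod-blue", "prod-green")


def standby_for(primary: str) -> str:
    """Return the production environment that is not currently primary."""
    if primary not in ENVIRONMENTS:
        raise ValueError(f"unknown environment: {primary!r}")
    return ENVIRONMENTS[1] if primary == ENVIRONMENTS[0] else ENVIRONMENTS[0]


def plan_release(primary: str) -> dict:
    """Describe a release: deploy to the standby, then make it primary."""
    target = standby_for(primary)
    return {
        "deploy_to": target,     # new code lands on the idle environment
        "new_primary": target,   # traffic moves once the deploy is verified
        "rollback_to": primary,  # the old environment stays intact
    }


if __name__ == "__main__":
    print(plan_release("prod-blue"))
```

Because shared state lives outside both colours, switching the primary in a model like this changes only which compute and API layer serves traffic.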

In some ways, that complexity spoils the naive approach, but the results from setting up Blue-Green deployments in this way have been positive and have fully met our needs. We haven’t needed to worry about the lack of flexibility around traffic splitting.

In Part 3, we lay out what we discovered and achieved in the last four months before launch.