This concept was developed by Arnau Siches, backend engineer, and Jarod McBride, developer in test at our London studio. Please join the development of Mastermind, or give us feedback, on GitHub.

In the past, we’ve blogged about more technical stuff, but we wanted to bring our thoughts to the main blog so we can make things a bit more accessible. With our development-oriented posts we try to keep things straightforward, without losing necessary technical language or dumbing things down. If you want to talk, or ask about anything we discuss here, just get in touch!

Testing is hard. Mocking up a service to test against is even more difficult. Doing all this when the service is under development and constantly in flux is downright maddening. There are many reasons to create a system that acts like your live backend services, but that responds in a prescribed way. These types of system are used not only for QA/testing, but also for active development, user testing, and demoing for clients and stakeholders. To put it simply, a mock service is a known entity guaranteed to respond in a predictable way.

These types of mock system can take on many forms. Most of them are built to mimic systems that are already in place, or currently being developed. The problem is one of work duplication. To be usable, the system must be able to handle whatever request is thrown at it, and know the right thing to do. Most of the time all that’s needed is control of one single part, but when mocking an entire service everything must be covered. This requires a great deal of overhead and maintenance.

One solution is to build a special version of the app that includes mocking, or hooks into the app to make it more testable. The problem with this is that what is being tested is no longer the real app that the end users will get their hands on.

How it was before

Previously, part of the project set-up would be to find a way to mock the backend services. Ideally, this would have been some kind of container that could be run locally for each developer/tester/CI. But this rarely happened with client work. At that point, it was time to find a solution for the problem of mocking. This usually meant setting up a local system that the programme in test/development would point at, and which could be controlled by hand. It often took the shape of a Sinatra server that would serve up static responses when certain endpoints were called, or return error cases (e.g. a 500, or a slow response).
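To give a feel for that kind of hand-rolled mock, here is a minimal sketch in Python rather than Sinatra. It is not code from any of our projects: the endpoint paths and payloads are invented for the example.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical endpoints and payloads, invented for this example.
CANNED = {
    "/users/1": (200, {"id": 1, "name": "Ada"}),
    "/orders": (500, {"error": "internal server error"}),
}


def canned_response(path):
    """Return (status, body) for a path; unknown paths get a 404."""
    return CANNED.get(path, (404, {"error": "not mocked"}))


class MockHandler(BaseHTTPRequestHandler):
    """Serves the static responses above for GET requests."""

    def do_GET(self):
        status, body = canned_response(self.path)
        payload = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def make_server(port=8000):
    """Build (but do not start) a mock server on localhost."""
    return HTTPServer(("localhost", port), MockHandler)
```

Calling `make_server().serve_forever()` starts the mock, and the app under test is then pointed at `http://localhost:8000`. Note that every endpoint the app touches has to appear in `CANNED`, otherwise it gets a 404.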

Of course, this can be tedious and takes time away from developing or testing the products already being worked on. On top of that, it can be unstable and require manual updates if the API changes. This method also requires that each endpoint being used is mocked, even if it isn’t part of what’s being tested/developed. This creates more work and possible fragility. Really, it’s just frustrating.

The system in the middle idea

While ruminating over this problem – and possible solutions – it was proposed that what was really needed was a system that could watch for certain URLs and intercept them, returning a specific response. After some discussion, we realised that what was being described was very similar to a ‘man in the middle’ attack. In the hacker world, this could be used to do nefarious things, but it could also be used to improve the workflow and ease the development pain points the team was experiencing.
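The core of that idea fits in a few lines. The sketch below is only an illustration of the decision a system in the middle makes for each request, not Mastermind’s implementation; the URL, the payload and the `forward` callback are all assumptions made for the example.

```python
from typing import Callable, Dict, Optional

# Hypothetical URL and payload, invented for this example.
INTERCEPTS: Dict[str, str] = {
    "https://api.example.com/profile": '{"name": "Test User"}',
}


def handle(url: str, forward: Callable[[str], str]) -> str:
    """Return the canned body for a watched URL, else forward upstream."""
    canned: Optional[str] = INTERCEPTS.get(url)
    if canned is not None:
        return canned
    # Anything we are not watching goes to the real backend untouched.
    return forward(url)
```

Only the watched URL needs a canned response. Every other request is handed to `forward`, which stands in for the real round trip to the backend.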

Tools such as Charles already provide this, but Charles is not very scriptable – and this makes it hard to use for automated testing. Then someone pointed out mitmproxy. At first, it seemed like overkill. But after some rough prototypes, it became clear that it was going to be a viable solution to fill the need. So what does this system look like?

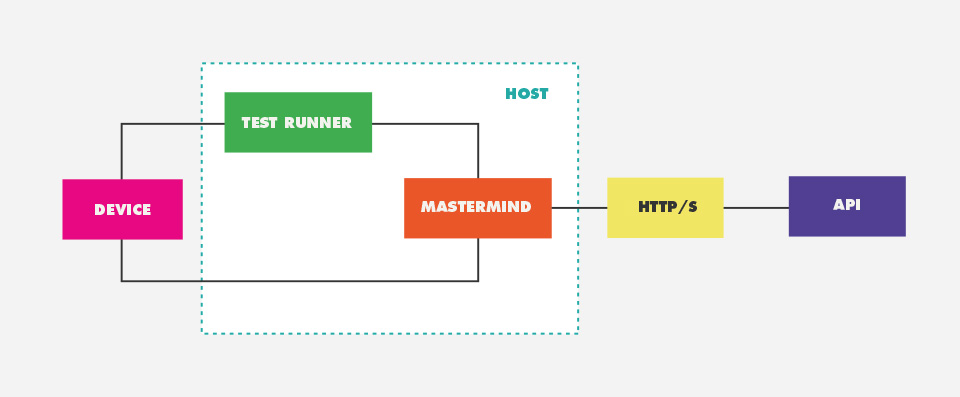

Mastermind is a command line interface, on top of mitmproxy, that allows you to start the proxy with a predefined mitmproxy script in order to simplify its usage. There are three modes you can start Mastermind with: simple, driver or script.

Simple mode

Simple mode is the quickest way to mock an HTTP request. Just pass Mastermind the URL you want to intercept and the JSON response you want to return. Done. Check the project readme for an example.

Driver mode

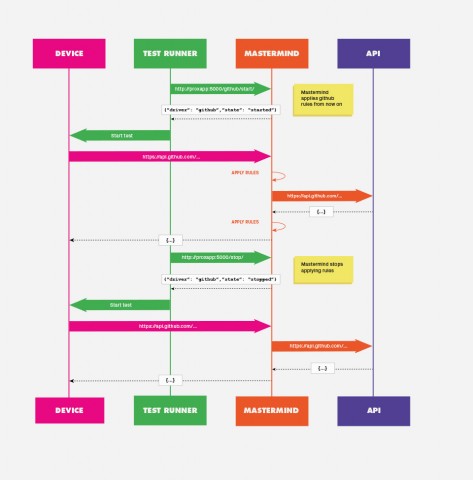

The driver mode expands what the simple mode offers. It allows you to define a set of rules in a YAML file, where each rule describes the URL to intercept, the headers you want to add or remove – before doing the real request – the headers and body you want for your mocked response, etc. You can even skip hitting the remote API if you are only interested in the mocked response.
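As a rough picture of what such a rule carries, here is a sketch in Python. The field names are illustrative and are not Mastermind’s actual driver schema (check the project readme for the real YAML format); only the idea of a skip flag comes from the tool itself.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Rule:
    # Field names are illustrative, not Mastermind's actual driver schema.
    url: str
    add_request_headers: Dict[str, str] = field(default_factory=dict)
    remove_request_headers: List[str] = field(default_factory=list)
    response_headers: Dict[str, str] = field(default_factory=dict)
    response_body: Optional[str] = None
    skip: bool = False  # True: answer from the mock without calling the remote API


def find_rule(rules: List[Rule], url: str) -> Optional[Rule]:
    """Return the first rule whose URL matches exactly, if any."""
    return next((r for r in rules if r.url == url), None)


def prepare_request_headers(rule: Rule, headers: Dict[str, str]) -> Dict[str, str]:
    """Apply the rule's header removals, then additions, to a copy of the headers."""
    removed = {name.lower() for name in rule.remove_request_headers}
    result = {k: v for k, v in headers.items() if k.lower() not in removed}
    result.update(rule.add_request_headers)
    return result
```

For example, a rule with `remove_request_headers=["Cookie"]` and `add_request_headers={"X-Test": "1"}` strips the cookie and tags the request before it leaves the proxy. Header removal is case-insensitive here because HTTP header names are.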

To allow different rules for a given URL you need different drivers (different YAML files). To load a driver you use the Driver API, which offers a couple of endpoints to start and stop them. So, a test runner (e.g. Xcode) can warm up the proxy with a particular driver before starting a test. This makes Mastermind portable and usable in a multitude of environments.

Finally, you can enable response validation using JSON Schema against the response received from the remote API. This is convenient when you want to guard yourself against broken API contracts.
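To show the kind of contract check this enables, the sketch below validates a response body against a deliberately tiny subset of JSON Schema (`required` and per-property `type` only). It is a hand-written stand-in for illustration, not how Mastermind validates responses; a real set-up would rely on a full JSON Schema validator.

```python
import json
from typing import Any, Dict, List

# Maps a few JSON Schema type names to Python types (subset only).
_TYPES = {"string": str, "integer": int, "number": (int, float),
          "boolean": bool, "object": dict, "array": list}


def _matches(value: Any, type_name: str) -> bool:
    """True if value has the named type; unknown type names are not checked."""
    if type_name in ("integer", "number") and isinstance(value, bool):
        return False  # Python treats bool as int, JSON Schema does not
    expected = _TYPES.get(type_name)
    return expected is None or isinstance(value, expected)


def contract_errors(schema: Dict[str, Any], body: str) -> List[str]:
    """Check a JSON object body against 'required' and per-property 'type'."""
    data = json.loads(body)
    if not isinstance(data, dict):
        return ["response is not a JSON object"]
    errors = [f"missing required property: {name}"
              for name in schema.get("required", []) if name not in data]
    for name, spec in schema.get("properties", {}).items():
        if name in data and not _matches(data[name], spec.get("type", "")):
            errors.append(f"wrong type for property: {name}")
    return errors
```

An empty list means the response still honours the contract. Anything else is a sign that the remote API has drifted from what the app expects.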

Script mode

The script mode offers the same mechanism as mitmproxy or mitmdump. It is intended to act as a transition step, before moving to the driver mode, if you have already used mitmproxy and have scripts created.

Roadmap

Mastermind is an initial effort and, therefore, does not solve every problem in this space. It doesn’t solve, for example, the potential slowness of having to do full HTTP roundtrips, although for some cases you can benefit from using the skip property. It also doesn’t provide an automated way to create or maintain the mocked responses. None of these are deal-breakers for using Mastermind but are pain points that we have found when mocking backend services. So, with this in mind, our current thinking involves doing the following:

- API introspection (record and playback requests/responses)

- Allow tests in parallel (if possible)

- Run on hosted CI (Travis, Circle, Bitrise, etc.)

- Provide better logs

Conclusions

We are using Mastermind to test the hypothesis that a ‘man in the middle’ style system will make it easier to mock existing and new backend systems. So far, the response from our local users has been positive, which is why we are opening it up. We want to see if others are having the same pain points as us and if Mastermind is a possible solution. We intend to continue to grow and evolve Mastermind as we get new feedback.