This blog post is a guest piece by Manisha Jangra and Sinan Arkonac, recently graduated Masters students at University College London’s Interaction Centre. Last summer, ustwo Auto teamed up with several UCL students on their Masters in Human Computer Interaction to work on vehicle autonomy research and design. Below you will find some results of this combination of forces. Illustrations by Shashi Kahlon.

If you have paid attention to the news about driverless cars lately, you might think that amazing driverless cars are just around the corner, and driver’s licenses will soon be as valuable as a Blockbuster membership. While it’s easy to get carried away with this shiny future, a more objective look will show that we aren’t quite there yet. Fully autonomous cars are being tested, but they still have their rough spots and consumer ownership carries many more questions. Looking at vehicles on the road today, the ones with autonomous capabilities are still quite limited in their abilities. They can maintain a certain speed, keep their distance from another car, and stay in lane (if the weather is good and the roads are well marked). However, in cases where they cannot do so, they require the driver to take control.

Imagine a car with two steering wheels and two sets of pedals. Unless only one of you did the driving, getting anywhere with a friend in the second driver’s seat would be a nightmare. If you decided to share control of the car, the communication between you and your friend would need to be fantastic. Any mistake or miscommunication could be catastrophic. Now imagine that in the second driver’s seat is a robot. The robot isn’t very good at talking to humans yet, and it doesn’t quite have all the skills for a driver’s license either. This is essentially what the semi-autonomous vehicles (SAVs) of today are.

In the past year we have looked to tackle the issues that this awkward but very useful robot can bring to the world of transportation with a lot of research and a bit of design thinking.

THE PROBLEM

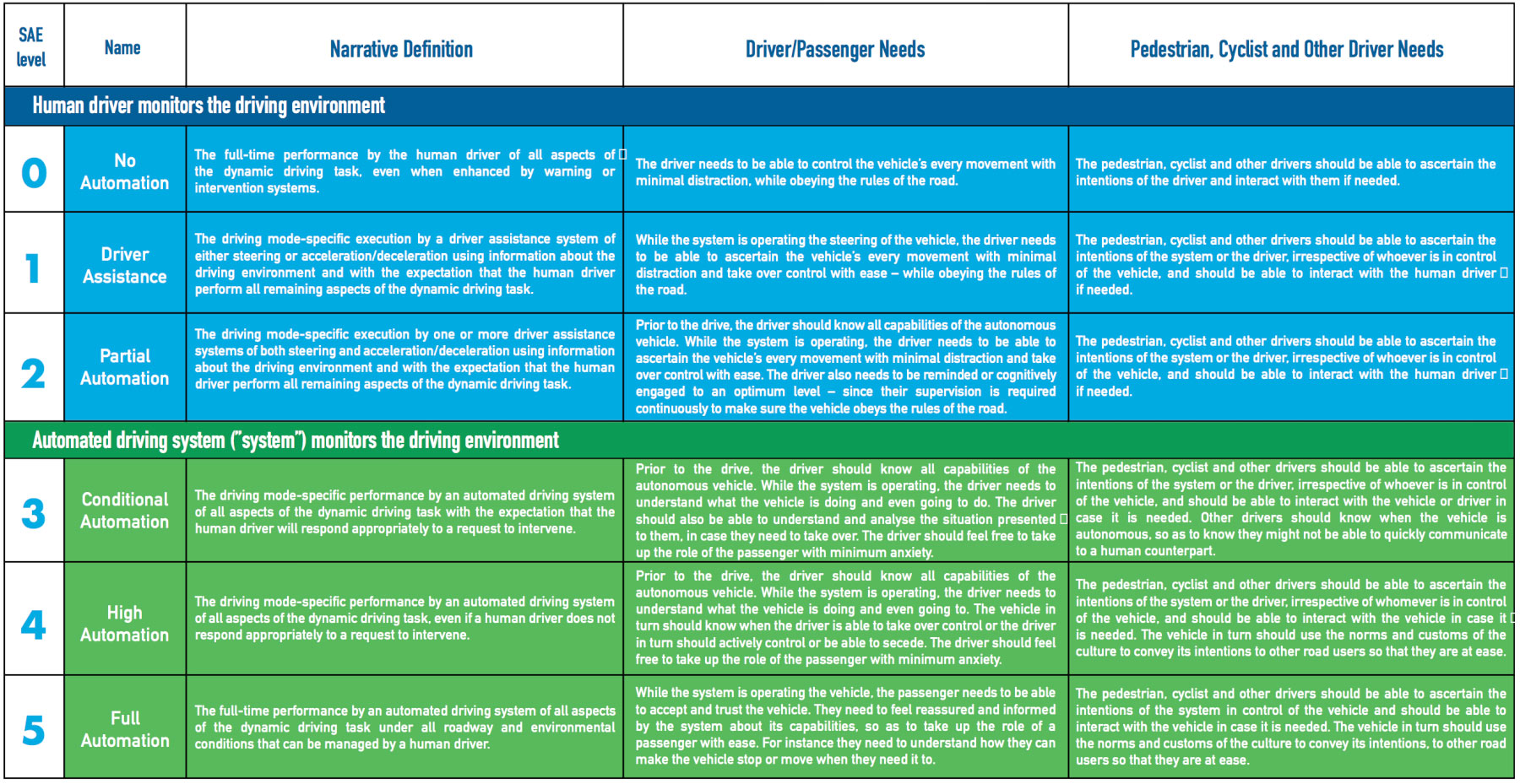

Looking at the current batch of self-driving cars that are being sold to consumers, we can see that they are not that great at fully driving themselves. In the spectrum ranging from your grandma’s car to Minority Report, these cars are somewhere in the middle. More specifically, the vehicles available to consumers today are at level 2/3 out of 5. These five levels have been identified by the industry and provide the guidelines used to describe autonomous vehicles.

For reference, here is ustwo Auto’s handy interpretation of these industry accepted (SAE) levels of autonomy, which adds the needs of the pedestrian, cyclist and other drivers.

Level 2 of autonomous driving can be very tricky. At this stage the vehicle depends on the human to take over if something is going wrong, while still lessening the driver’s mental load when it’s working. The tension between these two ideas means that designers and engineers of these systems have to be extra careful. If drivers of level 2 autonomous vehicles get too comfortable, it can lead to injuries or even loss of life, as we have seen in the case of the fatal Tesla accident. It cannot be overstated how important it is to be careful with the applications of autonomous driving technology while it is still incomplete (under level 4).

One serious pain point of this level of autonomy is the process of the vehicle handing over control to the driver. This handover will happen when the vehicle has trouble reading the environment around it and needs to rely on the human driver. The issue with this is that it throws an unsuspecting human into a complex situation where they need to both figure out what is happening and react to it quickly. At this point it is important that the driver has developed a good mental model of how their semi-autonomous vehicle works. In the same way that your older relatives might have trouble with new tech devices, drivers could have issues with new semi-autonomous vehicle technologies. The difference between those two examples is that one ends up with accidental selfies, while the other could lead to serious accidents.

The same need applies in reverse: once vehicles reach level 3 of autonomy, it will become increasingly important that SAVs have an accurate mental model of the driver’s behaviour. If they do not, the vehicle could misjudge the driver’s state or actions and be responsible for a serious accident.

GOLDILOCKS ZONE STUDY

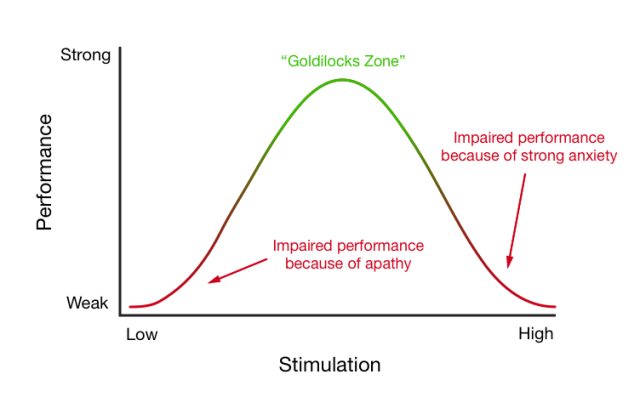

To begin cracking away at this problem, we researched models of human performance on complex tasks. We were pleased to find a simple but well-established phenomenon known as the Yerkes-Dodson Law. To put it plainly, this law states that the relationship between the stimulation levels of a person and their performance on a complex task is bell-shaped. This means that when a person is bored and apathetic, or hyper-aware and stressed, they will perform poorly. When the person is stimulated, but not too much, they perform these tasks best. To put it even more simply, there is a “Goldilocks Zone” of stimulation for complex tasks. This model would be helpful for understanding human performance on the complex task of driving a car, especially when control over that car is given up and handed back. That being said, we had to begin by testing the validity of applying this law to drivers of semi-autonomous vehicles.
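To make the bell shape concrete, here is a minimal Python sketch. The Yerkes-Dodson Law does not prescribe a formula, so the Gaussian curve, the 0 to 1 stimulation scale, and the `optimum`, `width` and `threshold` values are all illustrative assumptions of ours, not something measured in the study.

```python
import math


def performance(stimulation, optimum=0.5, width=0.2):
    """Illustrative bell-shaped performance score in (0, 1].

    Assumption: stimulation is on a made-up scale from 0.0 (bored)
    to 1.0 (overwhelmed), and the curve is a Gaussian around `optimum`.
    """
    return math.exp(-((stimulation - optimum) ** 2) / (2 * width ** 2))


def in_goldilocks_zone(stimulation, threshold=0.8):
    """True when the illustrative performance score is at or above `threshold`."""
    return performance(stimulation) >= threshold


if __name__ == "__main__":
    for label, level in [("bored", 0.1), ("engaged", 0.5), ("overwhelmed", 0.9)]:
        print(f"{label:12s} stimulation={level:.1f} "
              f"performance={performance(level):.2f} "
              f"in zone={in_goldilocks_zone(level)}")
```

With these made-up numbers, both the bored and the overwhelmed driver score far lower than the engaged one, which is the pattern the law describes.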

We built a simulator and programmed several runs so that we could have participants go through situations in a controlled setting. Participants had to respond to various tasks, such as red lights or lane changes. In order to influence workload and stimulation levels we used distracting secondary tasks such as videos and memory tests. By doing this we could get a sense of a wider range of drivers’ stimulation levels and workload demands. Using the data we collected we wanted to find whether there was a link between drivers’ performance, autopilot usage, and workload. By collecting all of our results as raw data we were able to go beyond qualitative evidence. Gathering quantitative evidence gave us hard numbers that we could point to so we could better understand the split-second decisions of drivers.

HANDOVER PROCEDURE STUDY

In a separate study we wanted to explore whether changing the handover procedure itself would affect task performance. Following handover there is a period of adjustment where drivers are still regaining their awareness of the driving situation. There are certain factors that could affect situational awareness and different levels of awareness emerge at different times. Figuring out if changing handover could have an effect on these factors and in turn driver performance was valuable as well.

Using the simulator, we looked at how handover affects performance on critical tasks such as braking and lane changes. Re-adjusting to changes in steering sensitivity requires a level of situational awareness that takes longer to acquire following handover. We also explored whether the length of time spent in autopilot mode affects how long it takes to regain situational awareness. Finally, we compared our own method of handover, called ‘stepwise’, which gives drivers control of the steering first before handing over control of the pedals, with the current method of handing over all controls at once. We wanted to see whether stepwise handover would help reduce the time taken to regain situational awareness and mitigate the effects of autopilot duration and task type.

THE RESULTS

The results from our Goldilocks Zone study showed that a significant number of drivers turned on autopilot when they were overwhelmed, and many turned off autopilot when they were bored with the tasks given to them. On top of this we saw drivers perform tasks such as red light responses worse when they were overwhelmed with their workload. This unconscious response was fantastic for our hypothesis because it lent some support to our model of driver performance. The drivers subconsciously kept their stimulation levels in the “Goldilocks Zone” by giving control to their vehicle and taking it back. Alongside that there was evidence that drivers performed better because of this.

The results from the Handover Procedure study showed that the longer the participant spent in autopilot mode, the slower their reaction time was for red lights. This effect was reversed for lane changes when there was a longer duration between handover and task, as this gave them a sense that their driving circumstance had changed over time, increasing awareness of changes in steering sensitivity. Using different types of handover procedures did not have any effect, likely because the handover occurs seconds before having to respond to a task. A few seconds is not enough time to develop awareness of the situation, so a more integrated solution is needed.

These two results made different but complementary contributions to our understanding of SAV interactions. The first study reveals how drivers’ desire to voluntarily take over control can be affected by the world around them, and the second shows us the potentially dangerous circumstance of compulsory handover of control. Looking at different circumstances of drivers’ reactions and performance with SAVs proved valuable. Our research shows that in all circumstances, situational awareness is essential for safe driving, and that this situational awareness can be influenced by the environment of the driver.

DESIGN IMPLICATIONS

In our research, we found that it is going to be just as important for an SAV to be looking at the driver as it is for it to be looking at the road. Using this newfound knowledge, we looked to find solutions to SAVs’ biggest problems. We were able to isolate several things we could change about SAVs that would help progress the industry.

One main issue the vehicle should look at is driver distraction. While this is obviously an issue in vehicles without autonomy, SAVs can pose a greater risk of distraction because they decrease the mental workload of driving. In order to counteract this, it would be good for a vehicle to be aware of a driver’s attentiveness and potentially nudge them into the Goldilocks Zone of stimulation.

In our research we also found that shorter durations of autonomous driving provided fewer opportunities to be distracted by a secondary task. This translated to drivers having better situational awareness overall. A system that could hand over control to the driver in order to keep them actively involved in monitoring the environment could solve this problem. It would also make sense according to the model of driver performance we had tested.

We also found that a system could work in the opposite direction by asking to take control from the driver when it senses that the driver is distracted, and the environment around the car would be too demanding for the driver to safely navigate. This could save a lot of lives by preventing drivers from trying to handle too many things at once.

THE JOEY

After reading up on ustwo Auto’s brilliantly designed Roo, we thought we could create our own. We packaged all the knowledge we gathered from our research and design implications into our design prototype of an SAV. We’re calling this prequel to the Roo the Joey (the name of a baby kangaroo).

The Joey is a level 4 Semi-Autonomous Vehicle. What this means is that while it has the capabilities to drive itself through complex situations (turning, stop signs, and intersections), it will still occasionally need help from a human if it is having trouble. While this may seem less ideal than a fully autonomous vehicle like the Roo, the Joey would be available to us much sooner, as the technology is available to us today.

The Joey will have systems meant to understand the driver’s situation, and provide the driver with safer and more intuitive options than current SAVs. Using our research on situational awareness, workload, stimulation, and performance we have designed systems specifically for the safety of drivers in SAVs.

The Joey would have a multitude of sensors to understand the driver’s stimulation and workload levels. This could mean heart rate monitors or just a driver-facing camera with facial recognition software. On top of this the Joey would have sensors to understand the driving environment. This could mean microphones to measure noise and music levels as well as conversations happening with the driver. The Joey would even take into account any incoming cell phone calls. While the Joey would obviously be monitoring the road and traffic conditions outside the car, it would also be assessing them for how easily a human could take over at any time.

With all of these sensors and measurements, the Joey would react to two conditions. If the Joey senses the driver is overstimulated and overwhelmed, the Joey would offer to take control of itself so the driver could focus on doing tasks that do not carry risk to the people in the vehicle. On the other hand, if the Joey senses that the driver is bored or under-stimulated, it would look for ways to stimulate the driver. This could mean playing music, offering to call a friend of theirs, or even starting a conversation with the vehicle’s voice interface. If the Joey senses that the road conditions ahead are safe enough, it could even offer to give control back to the driver so they can be stimulated by the act of driving. Of course no two drivers are the same, so all of the sensor data and driver response success would benefit from a machine learning platform that would adapt this system to better cooperate with each individual driver’s behaviour.
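The two conditions above can be sketched as a small rule-based decision function in Python. This is only an illustration of the idea, not how a real vehicle would be built: the `CabinState` fields, the 0 to 1 stimulation scale, and the `low` and `high` thresholds are hypothetical names and values we made up, and the per-driver machine learning is left out entirely.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    OFFER_TO_TAKE_CONTROL = "offer to take control"
    STIMULATE_DRIVER = "play music, suggest a call, or start a conversation"
    OFFER_CONTROL_TO_DRIVER = "offer to give control back to the driver"
    NO_CHANGE = "no change"


@dataclass
class CabinState:
    stimulation: float   # hypothetical scale: 0.0 bored .. 1.0 overwhelmed
    road_is_calm: bool   # assumed output of the road-facing sensors
    autopilot_on: bool


def choose_action(state, low=0.3, high=0.7):
    """Pick the Joey's suggestion; `low` and `high` are made-up thresholds."""
    if state.stimulation > high:
        # Overwhelmed driver: offer to drive, unless the Joey already is.
        if not state.autopilot_on:
            return Action.OFFER_TO_TAKE_CONTROL
        return Action.NO_CHANGE
    if state.stimulation < low:
        # Bored driver: hand back the wheel if the road allows it,
        # otherwise look for another way to stimulate them.
        if state.autopilot_on and state.road_is_calm:
            return Action.OFFER_CONTROL_TO_DRIVER
        return Action.STIMULATE_DRIVER
    # Driver is in the Goldilocks Zone: leave things alone.
    return Action.NO_CHANGE


if __name__ == "__main__":
    overwhelmed = CabinState(stimulation=0.9, road_is_calm=False, autopilot_on=False)
    bored = CabinState(stimulation=0.1, road_is_calm=True, autopilot_on=True)
    print(choose_action(overwhelmed).value)
    print(choose_action(bored).value)
```

Note that every branch returns an offer rather than a forced switch, in keeping with the idea that the driver decides whether to accept.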

While the Joey could be used with these systems, drivers would also have access to a simpler mode of driving. In this mode, an alert system provides situational updates, such as changes in speed or direction, and offers opportunities to take over driving control at points where the risk of doing so is low. This maintains situational awareness, while also allowing the driver to regulate their workload and stress levels. Allowing the driver to decide when they want to take over driving would give them a sense of control and security, building trust with the vehicle. The design would also take into account the optimal period of time between alerts. The alerts should occur frequently enough to provide informative updates while still leaving enough time between alerts to maintain an enjoyable and stimulating experience.

CONCLUSION

Overall, there are some issues that need to be addressed while the industry moves up to provide more autonomy to vehicles. Many of these issues seem to derive from a lack of understanding of the human in the equation. Driver awareness of, understanding of, and communication with semi-autonomous systems are difficult problems that require different skill sets. While the industry develops more ways to understand how a vehicle should react to traffic and the road in front of it, it has much to gain by also looking at understanding the driver. It is our hope that the industry can learn from our research and design ideas, and that this will translate to better and safer semi-autonomous vehicles.

This study features in ustwo Auto’s new book ‘Humanising Autonomy: Where Are We Going?’, which explores how human-centred design can help tackle barriers to autonomous vehicle adoption. Download the book in full for free here.