When I worked in the UK’s All-Party Parliamentary Group on AI, many of the experts who presented on AI’s potential impact shared a common concern. Because of impressions formed by movies like the Terminator franchise, people often associate AI with killer robots rather than with useful computer programmes that can help tackle global problems.

One frequent suggestion was to avoid Terminator-like humanoid depictions of AI in the media. I remember thinking, “You can’t erase these images from people’s minds.” They’re deeply ingrained, especially if the films made a profound impression on us when we first watched them.

Instead of viewing these deep-seated associations as a roadblock to the development and adoption of AI, what if we used them in the discovery and design process for AI projects to gain insight into how people might connect with and feel about this technology?

We recently tested this approach while working on a project to create a coherent AI product vision, and it turned out to be simple, insightful and fun. The exercise can reveal what types of AI your particular users would be comfortable with and which boundaries you shouldn’t cross. These insights can help you generate ideas for a product’s look, functionality and overall vision, ultimately bringing more value and creating a better user experience.

Seeing AI through a pop culture lens

When working on projects involving AI, we make ethics and alignment a top priority from the get-go because of the particular risks this technology poses: consequences that could escalate if left unchecked, misuse by bad actors, or biases that slip through when creative teams aren’t diverse enough. Part of this is making sure we align the future product with human values, both our users’ and our own as creators.

After the tricky process of aligning our values came the more exciting and fun stage – the ideation process. We had a lightbulb moment about a unique new way to gauge how people might react to an AI product: what if we explored how people feel about familiar references to AI in popular culture? Could this give us a clearer picture of how they think and feel about AI – ultimately helping us build better and more approachable products?

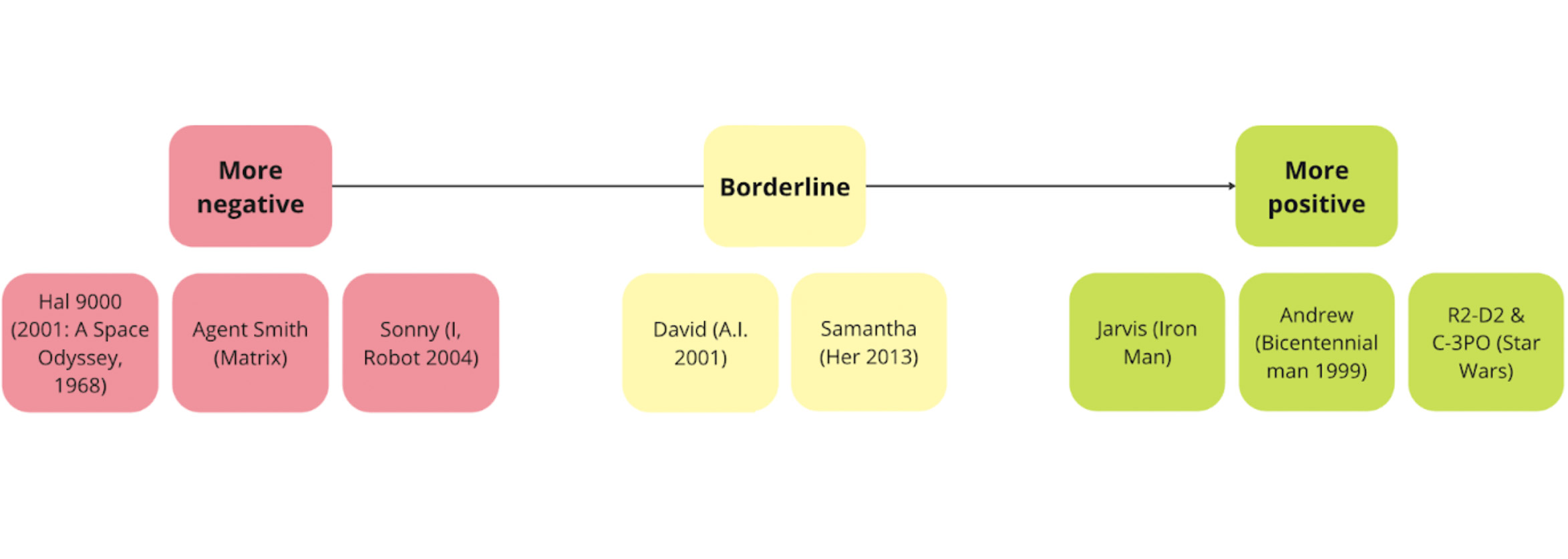

So, we asked a test group about their familiarity with movies featuring AI, robots or autonomous agents that can think, talk or otherwise interact with humans. We then asked them to place the AI characters from these movies on a spectrum from “more negative” to “more positive” based on how they felt about them.

Based on the responses, we classified the sentiment towards characters such as the Terminator and HAL 9000 from 2001: A Space Odyssey as “more negative”, and towards characters such as C-3PO from the Star Wars franchise as “more positive”. A third group in between, “borderline”, includes characters that raise complex ethical questions about creating this type of AI, like Samantha from Her.

We asked the test group to explain why they put characters into certain buckets. What exactly makes these AI characters more or less acceptable to them? Is it something in the way they look or interact with humans? Does it involve their superhuman abilities, boundaries or power dynamics with humans? Questions like these can help potential users articulate their own perceptions of AI, which ultimately helps you define the form and characteristics of the AI you build for them.

We grouped this feedback into four broad areas to help us better understand and analyse it: “interface”, “power dynamics”, “boundaries” and “tone of voice”. (Based on how your particular subjects explain why they put the characters into the “more positive”, “borderline” and “more negative” buckets, the broad areas you would use in your research might be different.)

Interface: form / colours / complexity

The interface and aesthetics of movie characters, from the colour palette to intricate metallic details, don’t just add visual appeal; they also shape and signal a character’s persona. For example, people often read the glowing red light in the eyes of characters like HAL 9000 or the Terminator as a sign of malevolent intent.

Humanoid forms strongly influence how people perceive these AI characters. While C-3PO’s human-like limbs and gestures make it seem comfortingly relatable, Sonny’s winking in I, Robot adds to its menacing aura.

Having a humanoid form does not, on its own, determine whether audiences see a character as “more negative”, “borderline” or “more positive”. Samantha’s lack of a physical interface doesn’t make it seem any less powerful or sophisticated in its ability to interact with humans and manipulate their behaviour, while a character with a humanoid form but more limited abilities, like C-3PO, represents less of a threat.

(Audiences also tend to “gender” AI characters like Samantha based on characteristics like form or voice, but I’ve referred to all of them as “it”, since according to ethical best practices, AI programmes should not be presented to users as gendered.)

Power dynamics: servant / peer / superior

The “more positive” AI characters are exemplified by dedicated servants like Jarvis from Iron Man: they go above and beyond to be helpful, never pass judgement, make people laugh and sometimes even sacrifice their own existence for their masters. They seem almost like friends, yet they still understand their boundaries and ask for nothing in return. We also noticed that audiences usually accept a character’s transition from servant to peer, as with Andrew in Bicentennial Man, particularly when the change is gradual.

However, there’s a clear limit to how much agency and critical judgement people are willing to accept in AI, as reactions to characters like Sonny show. Characters who hold power or control over humans, like Agent Smith from The Matrix, are typically viewed as threats.

Boundaries: ubiquity / presence / function

“Boundaries”, meaning how present AI characters are, how much power they hold and whether they display a sense of agency, strongly affect whether people feel fear or trust. For example, the omnipresence of HAL 9000 throughout the spaceship immediately instils fear. On the other hand, Jarvis’ role in Iron Man’s house makes it appear helpful, not threatening.

Machines like R2-D2, which were designed to serve a clear utilitarian function, tend to seem much more limited in their presence and therefore more trustworthy (unless, of course, their sole optimisation goal is to destroy the human race, like the Terminator’s).

More insights, from tone of voice to body language

Another of the broad areas we used to group responses was “Tone of voice: respectful / warm / cold-blooded”. Unsurprisingly, characters with more calculating or malevolent demeanours ended up at the “more negative” end of the spectrum. Meanwhile, David, who seems to express human-like emotions such as attachment and hopefulness, was placed in the “borderline” part of the spectrum. This might suggest that users don’t necessarily want to relate to AI interfaces on an emotional level or share these “human” feelings with them.

Other characteristics outside the broad groupings, like body language and expressions, can also uncover intriguing insights. Consider the deliberate, composed movements of Agent Smith compared with C-3PO’s clumsy, nervous gestures. Contrasts like this give valuable clues about user preferences, which could significantly inform the design of AI interfaces and, once it becomes technologically feasible, their movements.

While she isn’t an AI persona and wasn’t analysed in our test of this approach, “Stereotypical Barbie” from the blockbuster Barbie movie follows a journey of growing awareness and agency similar to those of many AI movie characters. You can do a deep dive with your users into what makes this “living” doll so endearing and relatable, especially at the movie’s ending. One question to consider: what about Barbie makes us welcome her conscious (and emotional) choice to become a “real” person, breaking free from essentially being “programmed” by how a human plays with her as an object?

How familiar associations can inform your product vision

When trying to disrupt the nascent world of AI with fresh product thinking, you can learn from and build on people’s perceptions of AI from popular culture to create products that your particular users are not only comfortable letting into their lives, but also eager to engage with on a daily basis.

Remember, every small detail, from an interface that reassures users it is non-threatening to a voice that exudes warmth instead of over-confidence, has the potential to captivate or alienate your users. Let’s build on familiarity to innovate and deliver experiences that inspire and resonate with users, opening new frontiers in the way we interact with technology and creating more human-first AI products.
